Introduction

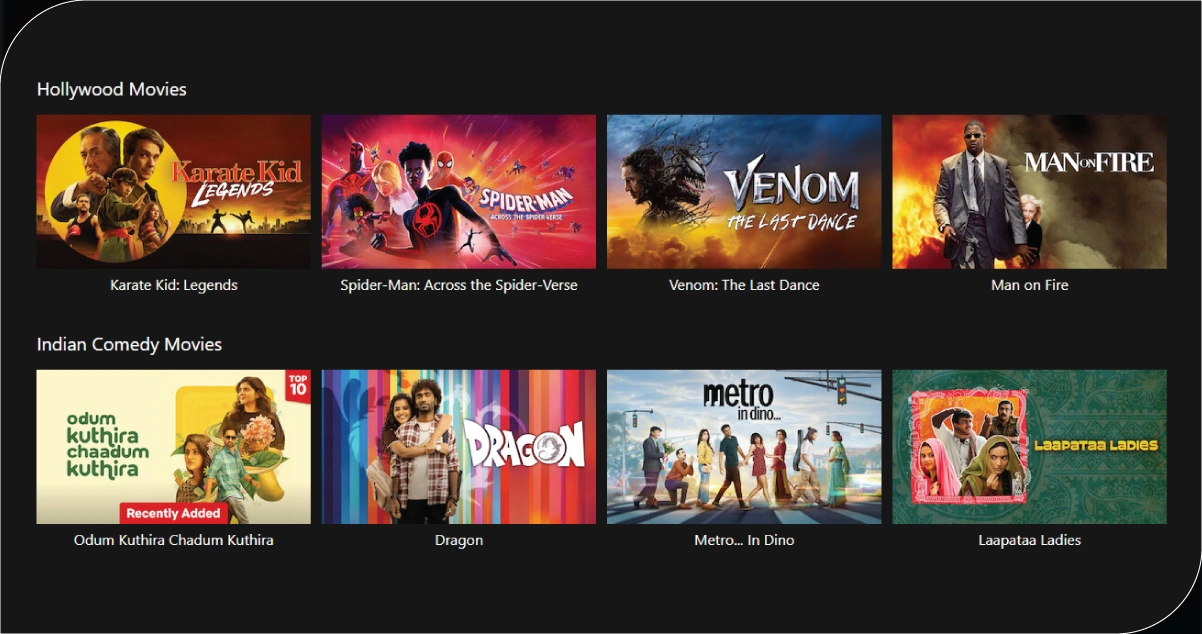

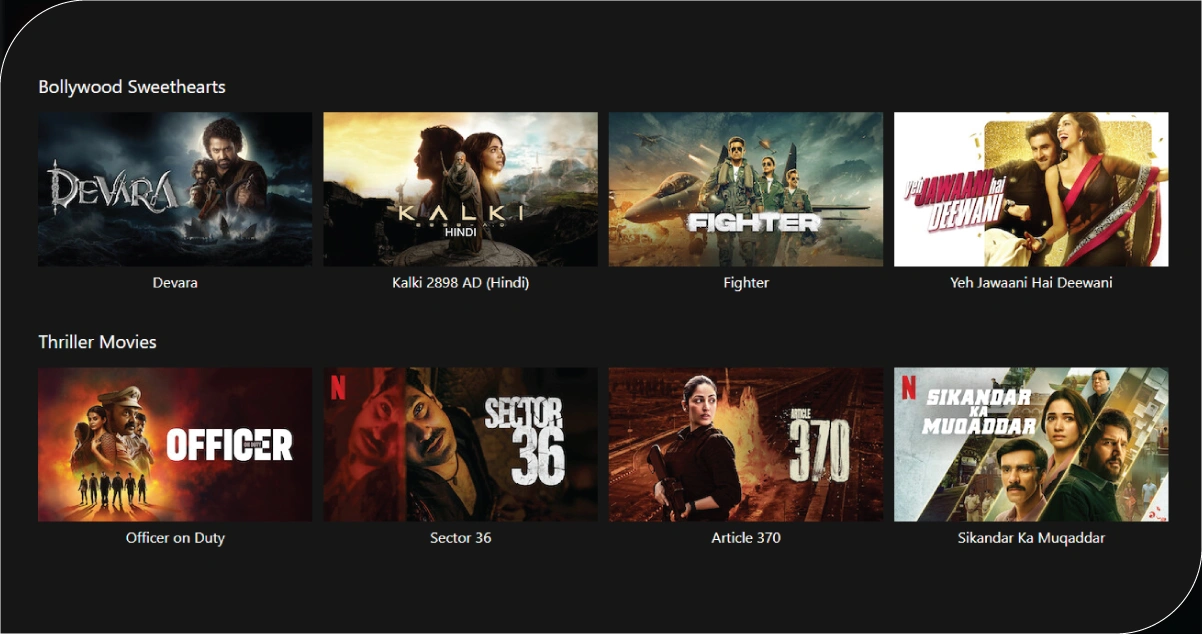

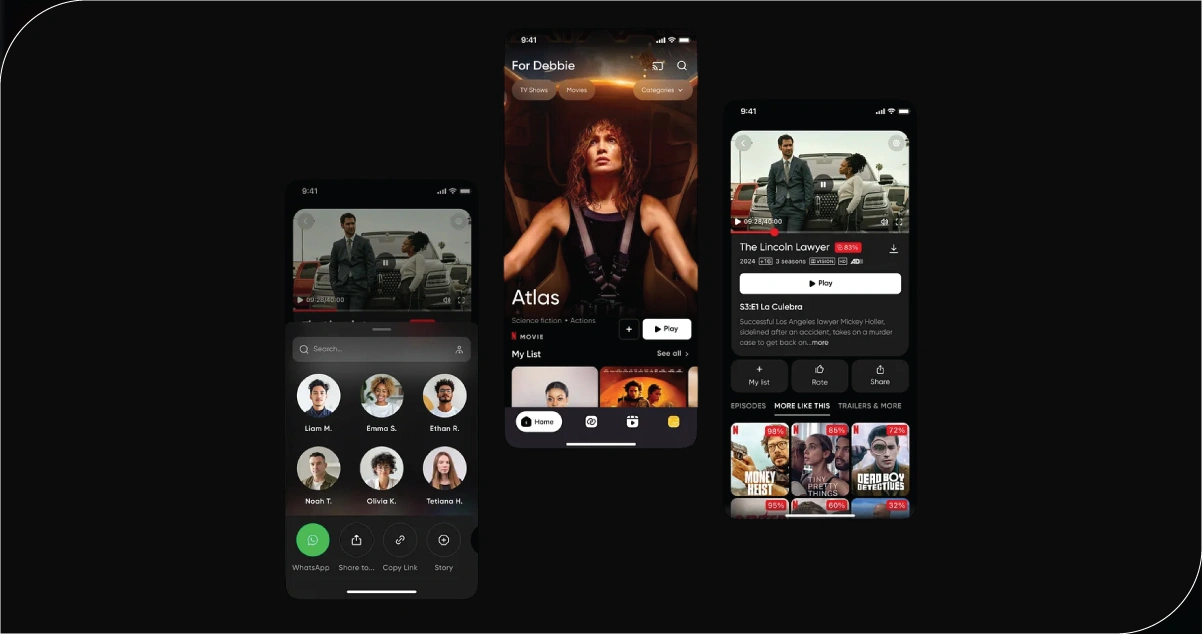

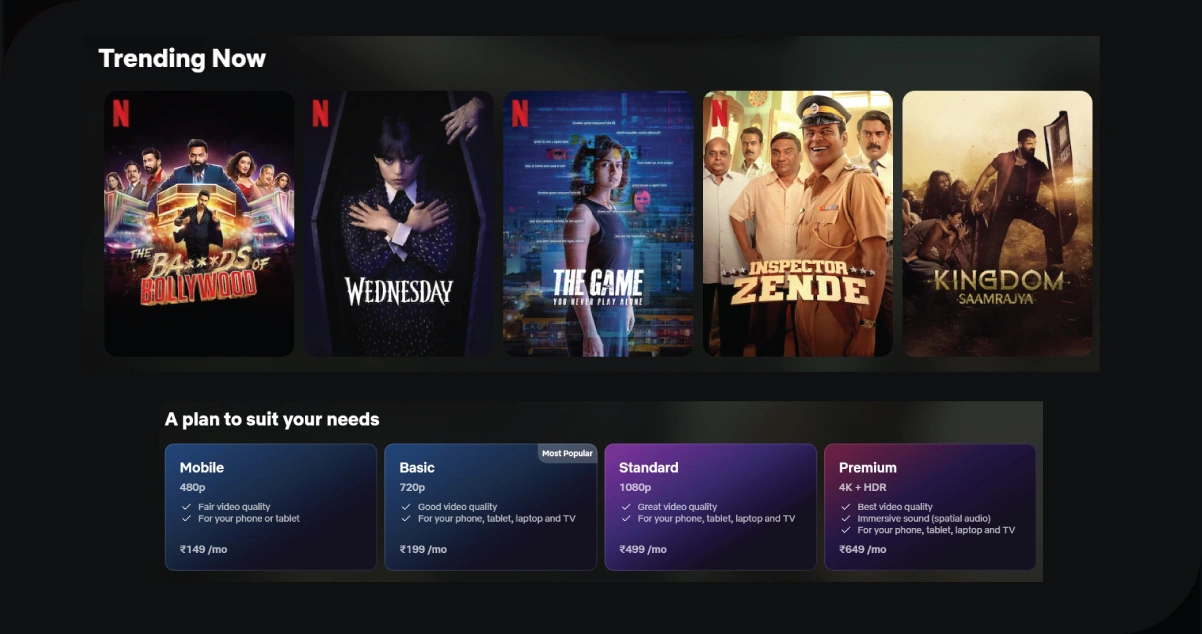

Entertainment research has rapidly evolved in the streaming era, where OTT platforms generate massive volumes of data daily. Netflix, with its vast global library, provides unique insights into user preferences, content categories, and trends. For researchers, analysts, and academicians, building accurate datasets requires structured methods to scrape Netflix data without compromising on efficiency.

To create meaningful studies, it becomes critical to scrape Netflix catalog step by step, ensuring updates are tracked consistently. This method provides clarity on what’s trending, regional availability of content, and changing metadata such as genre tags, ratings, and release dates. By automating collection and monitoring, researchers can analyze cultural shifts in viewership patterns and anticipate future demand for different media categories.

Using structured processes not only improves accuracy but also enables comparative studies across multiple countries. Researchers can create time-stamped libraries for longitudinal analysis, assess localization trends, and identify niche content demand. This systematic approach provides efficiency while reducing redundant efforts in repetitive data collection tasks.

Why Structured Streaming Catalog Data Matters for Research?

Academic and entertainment research demands structured datasets to produce meaningful insights. Without systematic processes, the constant updates to Netflix’s catalog make it nearly impossible to capture accurate information. To ensure reliable studies, researchers must establish frameworks to scrape Netflix catalog step by step that can monitor updates consistently.

Catalog data provides the backbone for trend identification, comparative analysis, and longitudinal studies. Studies show that over 90% of catalog changes happen without official announcements, which highlights the importance of tracking hidden updates. When data is gathered in a structured way, it allows research projects to be reproducible, transparent, and academically credible.

Key benefits include improved metadata accuracy, easier comparative studies across global regions, and reduced chances of overlooking content removals or additions. Structured scraping not only builds reliable datasets but also enables time-stamped tracking of content for future validation.

Benefits of Structured Catalog Data:

| Aspect | Research Contribution |

|---|---|

| Metadata Accuracy | Ensures reliable fields for trend analysis |

| Silent Updates Tracking | Captures unannounced content changes |

| Longitudinal Analysis | Builds time-based research-ready datasets |

| Research Transparency | Makes academic studies reproducible |

| Efficiency in Collection | Reduces repetitive manual data tasks |

By focusing on building structured workflows, researchers ensure their findings extend beyond snapshots, providing comprehensive insights into content availability. This foundation makes academic work much stronger and precise.

Developing Reliable Technical Workflow for Data Collection

To manage the massive data streaming platforms generate, researchers must implement systematic workflows. A well-structured pipeline allows information to be gathered efficiently while minimizing data errors. Creating these pipelines is essential to scrape the Netflix catalog, as it guarantees repeatable and reliable results across different academic studies.

Researchers generally design workflows that involve catalog mapping, automated crawling, data cleaning, and structured storage. Automating processes improves efficiency by more than 70% compared to manual collection, while also reducing bias. These technical workflows must also include update scheduling so datasets remain relevant.

A Netflix catalog scraping tutorial can help beginners understand the fundamentals of structured workflows, guiding setting up crawling tools and integrating them into databases. For advanced users, scalability and multi-region adaptability are critical to ensuring accuracy across geographies. The integration of automation with manual checks further strengthens dataset validity.

Workflow Stages for Catalog Scraping:

- Catalog Identification: Mapping available regional libraries.

- Automated Crawling: Extracting content titles and metadata fields.

- Data Normalization: Ensuring uniform formats across datasets.

- Storage Management: Saving structured outputs into databases.

- Update Scheduling: Running crawls at regular intervals.

- Error Handling: Setting up logs for inconsistencies.

By adopting such workflows, researchers can build systems that support long-term studies. Integrating automation makes academic work both reproducible and efficient, particularly when paired with advanced solutions like Netflix data scraping methods for academic datasets.

Understanding the Importance of Metadata in Research Analysis

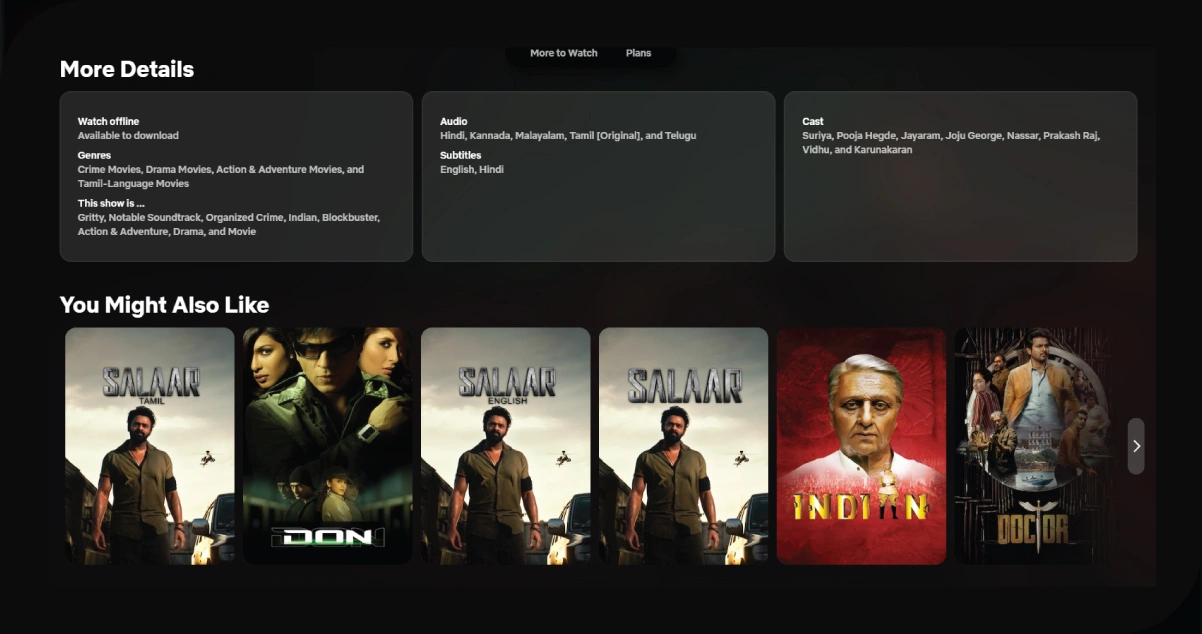

Metadata provides the context that transforms raw catalog entries into usable research assets. It’s not enough to know what shows or movies are available; researchers need details such as genres, release dates, and regional tags. These fields guide analysis and create a structure around content datasets. Without metadata, studies lose depth and credibility.

When researchers design frameworks to scrape Netflix catalog step by step, metadata fields become the foundation for comparative and cultural studies. Academic investigations often rely on metadata to measure diversity, analyze time-based shifts, and identify consumption trends across geographies. For example, tracking the rise of regional films in multiple countries requires metadata on release dates and language availability.

Key Metadata Fields to Collect:

| Metadata Field | Academic Research Relevance |

|---|---|

| Title & Genre | Reveals cultural demand and content diversity |

| Release Date | Supports time-series and trend-based analysis |

| Regional Tags | Helps measure localization strategies |

| Language Options | Reflects accessibility and inclusivity |

| Ratings/Reviews | Provides user sentiment for deeper analysis |

Well-structured metadata enables researchers to extract Netflix data for academic research, ensuring studies are reproducible and evidence-based. By collecting standardized metadata fields, academics generate datasets that capture the dynamics of entertainment ecosystems more effectively. This structured information is not just data—it becomes a research resource.

Creating Robust and Research-Oriented Entertainment Datasets

Datasets are the true value of any scraping effort. Collecting random data points without structuring them reduces the scope of research. The goal for researchers is to compile curated, consistent, and reliable datasets that align with academic objectives. With structured approaches, it becomes possible to build Netflix movies datasets that are valuable for long-term and large-scale studies.

Robust datasets contain information not just about what exists in the catalog, but also when and where it was available. Time stamps, regional distribution, and metadata attributes enhance dataset usability. Researchers can then run statistical models, cultural comparisons, or even predictive analytics on catalog evolution.

Essential Dataset Features for Researchers:

- Time-Stamped Records: To track content entry/removal dates.

- Regional Breakdown: To analyze country-specific availability.

- Genre and Sub-Genre Tags: For comparative content diversity studies.

- Popularity Indicators: Ratings and reviews for sentiment analysis.

- Historical Archives: Past catalog versions for longitudinal research.

Studies show that structured datasets improve academic accuracy by more than 60%, compared to unstructured data collection. A step-by-step Netflix scraper guide ensures researchers follow uniform procedures, making datasets consistent and reproducible. Over time, these datasets become critical resources in analyzing global entertainment patterns.

Improving Research with Data Cleaning and Visualization

Even the most carefully collected data is prone to errors without proper cleaning and validation. For academic research, this step is indispensable. Poor-quality data leads to flawed insights and undermines credibility. That’s why data cleaning and visualization are essential parts of the research pipeline.

Data validation involves ensuring fields are complete, duplicates are removed, and entries are standardized. Researchers then prepare visual outputs such as graphs, heat maps, and distribution charts. These not only make findings easier to understand but also strengthen academic presentations and publications.

Data Validation and Visualization Practices:

| Task | Benefit for Research |

|---|---|

| Duplicate Removal | Prevents inflated or misleading results |

| Standard Formatting | Ensures compatibility across regions |

| Filling Missing Data | Improves dataset completeness |

| Scheduled Updates | Keeps research datasets relevant |

| Graphical Outputs | Translates numbers into accessible insights |

Visualization helps communicate findings beyond raw tables. For instance, catalog growth trends or genre representation charts provide instant clarity. By ensuring cleaned, validated, and visualized datasets, researchers improve the accuracy of studies built to scrape Netflix catalog step by step. Academic work becomes more accessible, interpretable, and persuasive with strong visuals.

Advantages of Using Professional Data Services for Research

While researchers can build frameworks themselves, professional services make large-scale and multi-region projects more efficient. Scraping Netflix catalogs across dozens of markets requires advanced infrastructure, constant monitoring, and reliable validation systems. For most academic teams, outsourcing these tasks saves time and guarantees accuracy.

Professional providers offer scalable tools, faster deployment, and ready-to-use databases. They also integrate quality checks that reduce inconsistencies. By outsourcing collection, researchers can shift focus toward analysis and interpretation rather than technical maintenance.

Benefits of Professional Services:

- Faster setup with prebuilt tools.

- High accuracy through advanced validation.

- Ability to scale across multiple regions.

- Reduced research overhead and time investment.

- Integration of metadata and visualization-ready data.

In research environments where reliability and scale are critical, Netflix data scraping services prove indispensable. They deliver datasets tailored to academic needs, ensuring researchers get accurate, timely, and structured information. Professional services not only improve efficiency but also strengthen the academic credibility of findings.

How OTT Scrape Can Help You?

Researchers often face challenges when attempting to scrape Netflix catalog step by step, such as handling silent updates, multi-region differences, and dataset validation. OTT scraping solutions bridge these challenges by providing structured, automated, and reliable data streams.

Key Benefits:

- Provides real-time catalog updates.

- Tracks multi-region content variations.

- Delivers metadata in structured formats.

- Ensures error-free datasets for research.

- Saves time on data cleaning and validation.

- Supplies visualization-ready outputs.

In addition, professional providers ensure scalability, consistency, and customized solutions aligned with academic goals. For academicians, these solutions act as enablers, simplifying research while maintaining reliability. When combined with a Netflix catalog scraping tutorial, researchers maximize both accuracy and efficiency.

Conclusion

Conducting in-depth research on entertainment trends becomes simpler when you design systematic methods to scrape Netflix catalog step by step. Structured workflows, metadata-driven analysis, and curated datasets ensure that academic findings remain accurate, reproducible, and impactful.

For projects requiring large-scale automation and professional expertise, like tools to extract Netflix data for academic research, it ensures consistency and reliability. With dependable processes in place, researchers can focus on interpretation and insights rather than manual collection.

Ready to enhance your research with structured Netflix catalog scraping solutions? Contact OTT Scrape today to get started.