Introduction

In today's fast-paced digital landscape, the volume of news and articles published every minute is immense. From global breaking news to niche investigative stories, organizations depend on this information to drive insights, inform decisions, and maintain a competitive edge. Article data scraping enables businesses, researchers, and analysts to collect content systematically from a wide range of sources. However, news and article data scraping isn't just about gathering large volumes of data; it's about ensuring that the data is clean, accurate, and reliable. Poor-quality data can lead to misinformed decisions, inaccurate reporting, and missed market opportunities, so maintaining data integrity is crucial throughout the news data extraction process. This blog explores how to extract news and article content effectively, why data quality matters, and which best practices keep your results meaningful and trustworthy. With the right strategy, organizations can transform unstructured news into valuable business intelligence.

The Importance of News and Article Data

News and article data serve as a pulse for understanding global trends, consumer sentiments, and industry developments. Businesses use this data for market research, competitive analysis, and public relations. Governments and NGOs leverage it to monitor public opinion and track policy impacts. It's the backbone of content curation and recommendation systems for media companies.

The challenge lies in extracting article data from diverse sources: news websites, blogs, social media platforms, and subscription-based publications. These sources vary in format, structure, and accessibility, which makes extraction complex. Moreover, the data must be accurate, relevant, and timely to be useful. This is where data quality in web scraping becomes essential. Without high data quality, businesses and organizations risk basing decisions on flawed or outdated information, undermining the effectiveness of their strategies and insights.

What is Data Extraction?

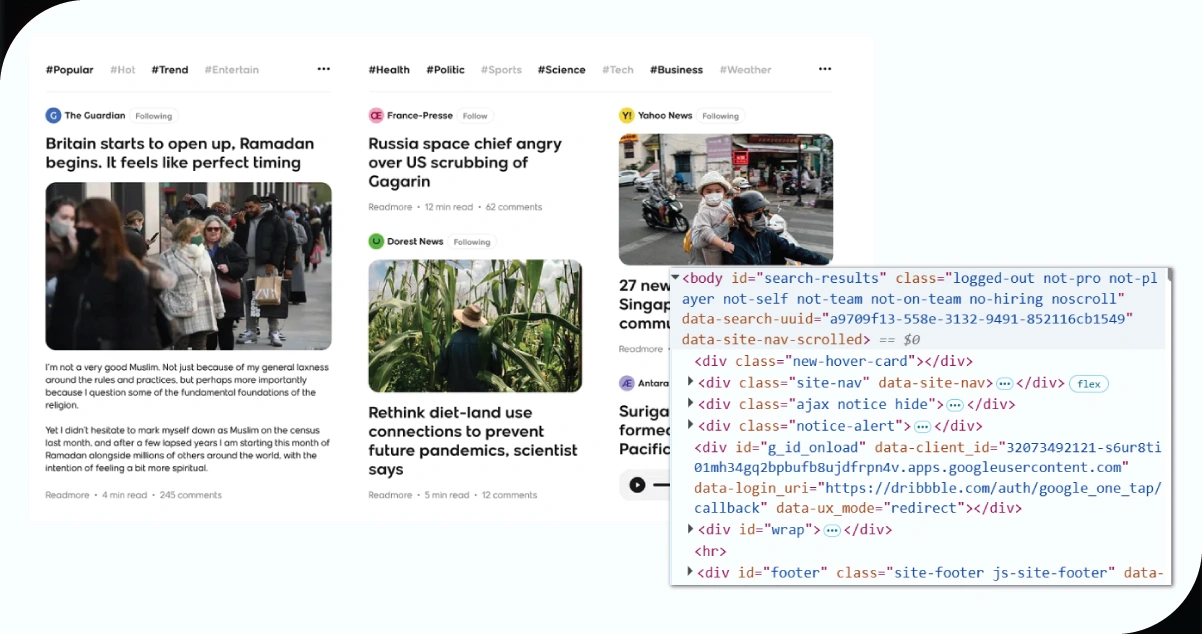

Data extraction involves retrieving specific information from unstructured or semi-structured sources. For news and articles, this typically means scraping text, metadata (e.g., author, publication date), and multimedia (e.g., images, videos) from web pages. Common methods include:

- Web Scraping: Using tools like BeautifulSoup, Scrapy, or Selenium to extract data from websites programmatically.

- APIs: Leveraging APIs offered by news providers and aggregators (for example, Reuters or NewsAPI) to access structured data.

- RSS Feeds: Subscribing to RSS feeds for real-time updates from news outlets.

- Manual Extraction: Collecting data by hand for small-scale or specialized needs (used less often because it is inefficient).
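To make the RSS route concrete, here is a minimal sketch that turns an RSS 2.0 document into article records using only Python's standard library. The sample feed, the `parse_rss` helper, and the output field names are illustrative assumptions. Real feeds vary (Atom, namespaces, missing fields), and a dedicated parser such as feedparser handles those cases.

```python
import xml.etree.ElementTree as ET
from email.utils import parsedate_to_datetime

def parse_rss(xml_text):
    """Return one dict per <item> in an RSS 2.0 document."""
    root = ET.fromstring(xml_text)
    items = []
    for item in root.iter("item"):
        pub = item.findtext("pubDate")
        items.append({
            "title": (item.findtext("title") or "").strip(),
            "url": (item.findtext("link") or "").strip(),
            # RSS 2.0 uses RFC 822 dates, e.g. "Tue, 10 Jun 2003 04:00:00 GMT";
            # convert them to ISO 8601 so downstream code sees one format.
            "published": parsedate_to_datetime(pub).isoformat() if pub else None,
        })
    return items

# Hypothetical sample feed; in practice, fetch the XML over HTTP first.
SAMPLE_FEED = """<?xml version="1.0"?>
<rss version="2.0"><channel><title>Example News</title>
<item><title>Markets rally</title><link>https://news.example.com/a1</link>
<pubDate>Tue, 10 Jun 2003 04:00:00 GMT</pubDate></item>
<item><title>No date here</title><link>https://news.example.com/a2</link></item>
</channel></rss>"""

for article in parse_rss(SAMPLE_FEED):
    print(article)
```

The sketch leaves out fetching so it runs offline. In a real pipeline, `urllib.request.urlopen` or another HTTP client would supply `xml_text`.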

Each extraction method has its trade-offs. Web scraping is flexible but prone to errors when websites change their structure. APIs offer structured data but may impose rate limits or access restrictions. RSS feeds are reliable for updates but limited in scope. Regardless of the method, the extracted data must meet quality standards to be actionable. This is why data quality matters: accurate, complete, and timely data is crucial for effective decision-making and analysis.
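A scraper that depends on a single selector breaks silently when a page layout changes. One defensive pattern is to try several common metadata conventions in order and record `None` when none match. Missing fields then surface in quality checks instead of crashing the pipeline. The sketch below uses Python's built-in `html.parser`. The candidate keys in `FIELD_CANDIDATES` are common Open Graph and `article:*` conventions, not a guarantee for any particular site. In practice, BeautifulSoup or Scrapy selectors would play the same role.

```python
from html.parser import HTMLParser

# Candidate <meta> keys per field, tried in order (assumed conventions).
FIELD_CANDIDATES = {
    "title": ["og:title", "twitter:title"],
    "author": ["author", "article:author"],
    "published": ["article:published_time", "pubdate", "date"],
}

class MetaCollector(HTMLParser):
    """Collect <meta name|property=... content=...> pairs and the <title> text."""

    def __init__(self):
        super().__init__()
        self.meta = {}
        self.title_text = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta":
            key = attrs.get("property") or attrs.get("name")
            if key and attrs.get("content"):
                # Keep the first value seen for each key.
                self.meta.setdefault(key.lower(), attrs["content"].strip())
        elif tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title_text += data

def extract_metadata(html):
    parser = MetaCollector()
    parser.feed(html)
    parser.close()
    record = {}
    for field, keys in FIELD_CANDIDATES.items():
        # First candidate present wins; None marks the field for review.
        record[field] = next((parser.meta[k] for k in keys if k in parser.meta), None)
    # Fall back to the <title> element when no meta title exists.
    if record["title"] is None and parser.title_text.strip():
        record["title"] = parser.title_text.strip()
    return record

page = ('<html><head><title>Fallback Title</title>'
        '<meta name="pubdate" content="2024-03-01">'
        '<meta name="author" content="Jane Doe"></head><body></body></html>')
print(extract_metadata(page))
```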

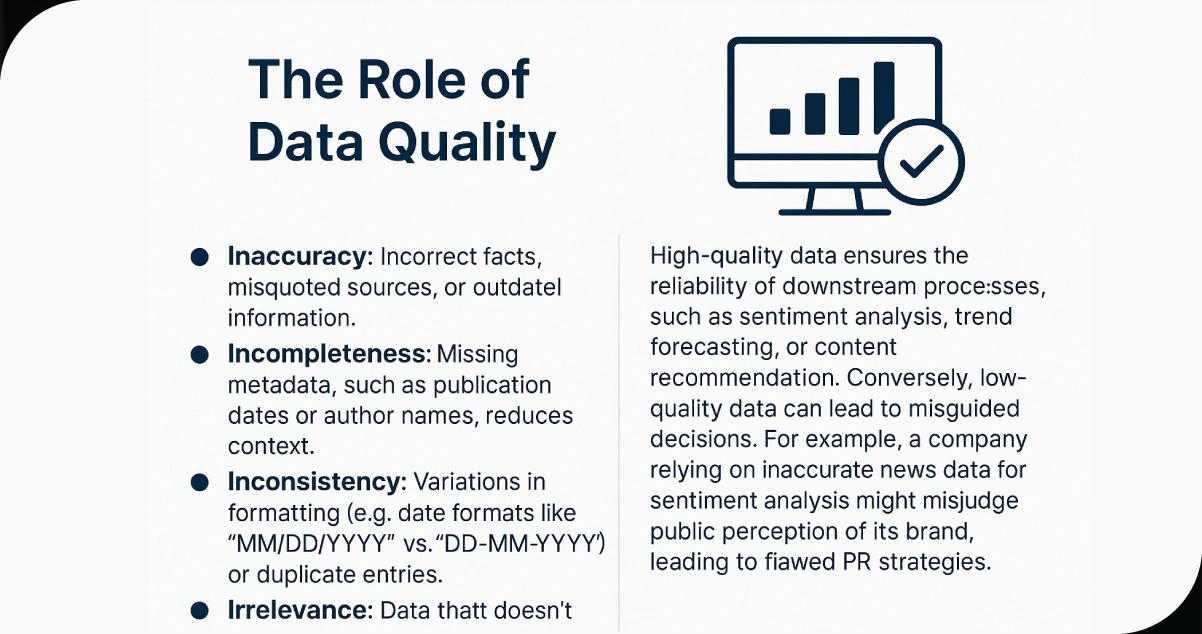

The Role of Data Quality

Data quality refers to the accuracy, completeness, consistency, timeliness, and relevance of data. In the context of news and article data, poor quality can manifest in several ways:

- Inaccuracy: Incorrect facts, misquoted sources, or outdated information.

- Incompleteness: Missing metadata, such as publication dates or author names, reduces context.

- Inconsistency: Variations in formatting (e.g., date formats like "MM/DD/YYYY" vs. "DD-MM-YYYY") or duplicate entries.

- Irrelevance: Data that doesn't align with the intended use case, such as off-topic articles.

- Untimeliness: Delayed extraction that renders time-sensitive news obsolete.
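Several of these dimensions can be turned into automated checks. The following sketch flags incompleteness, format inconsistency, and staleness for a single record. Several details are assumptions to adjust for your use case: the `REQUIRED_FIELDS` schema, the two-day freshness window, and the rule that timestamps without an offset are UTC. Relevance and factual accuracy need use-case rules or human review, so they are not covered here.

```python
from datetime import datetime, timedelta, timezone

REQUIRED_FIELDS = ("title", "url", "author", "published")  # assumed schema

def quality_issues(record, now=None, max_age=timedelta(days=2)):
    """Return a list of quality problems found in one scraped article record."""
    now = now or datetime.now(timezone.utc)
    issues = []
    # Incompleteness: required fields that are missing or empty.
    for field in REQUIRED_FIELDS:
        if not record.get(field):
            issues.append(f"missing:{field}")
    published = record.get("published")
    if published:
        try:
            # Inconsistency: anything that is not ISO 8601 is flagged.
            ts = datetime.fromisoformat(published)
        except ValueError:
            issues.append("inconsistent:published_format")
        else:
            if ts.tzinfo is None:
                ts = ts.replace(tzinfo=timezone.utc)  # assumption: naive means UTC
            # Untimeliness: older than the freshness window for this use case.
            if now - ts > max_age:
                issues.append("stale")
    return issues
```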

High-quality data ensures the reliability of downstream processes, such as sentiment analysis, trend forecasting, or content recommendation. Conversely, low-quality data can lead to misguided decisions. For example, a company relying on inaccurate news data for sentiment analysis might misjudge public perception of its brand, leading to flawed PR strategies.

Challenges in Extracting News and Article Data

Extracting news and article data with high quality is fraught with challenges:

1. Dynamic Web Structures: News websites frequently update layouts, breaking web scrapers relying on fixed HTML structures.

2. Access Restrictions: Paywalls, CAPTCHAs, and IP bans limit access to premium content.

3. Data Volume: The sheer volume of daily articles requires scalable extraction pipelines.

4. Multilingual Content: Global news sources publish in multiple languages, necessitating translation or language-specific processing.

5. Noise and Clutter: Extracted data often includes irrelevant elements like ads, navigation menus, or boilerplate text.

6. Ethical and Legal Concerns: Web scraping must comply with terms of service, copyright laws, and data privacy regulations like GDPR.

Addressing these challenges requires robust tools, careful planning, and a focus on data quality at every stage of the extraction process.
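Noise and clutter are often the first challenge to tackle in code. A simple, admittedly coarse approach is to drop text inside elements that usually hold navigation, ads, or scripts. The sketch below does this with the standard-library `html.parser`. The `SKIP_TAGS` set is an assumption. Production systems typically add site-specific rules or a dedicated boilerplate-removal library.

```python
from html.parser import HTMLParser

# Elements whose content is treated as boilerplate (an assumed, coarse list).
SKIP_TAGS = {"script", "style", "nav", "footer", "aside", "header", "form"}

class TextExtractor(HTMLParser):
    """Collect visible text, ignoring anything nested inside SKIP_TAGS."""

    def __init__(self):
        super().__init__()
        self.depth = 0  # > 0 while inside a skipped element
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self.depth += 1

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self.depth:
            self.depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and not self.depth:
            self.chunks.append(text)

def main_text(html):
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)
```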

Best Practices for High-Quality Data Extraction

To extract news and article data effectively while maintaining quality, organizations can adopt the following best practices:

Define Clear Objectives: Before extracting data, clarify the use case. Do you monitor brand mentions, analyze industry trends, or build a content recommendation system? Defining objectives helps determine which data points (e.g., headlines, full text, metadata) are relevant and ensures that only high-value data is collected.

Choose the Right Tools: Select tools that align with your needs:

- For web scraping, use libraries like BeautifulSoup (Python) for simple tasks or Scrapy for large-scale projects.

- For APIs, explore services like NewsAPI or Aylien for structured news data.

- Configure RSS feed parsers for real-time updates using tools like feedparser (Python).

Invest in tools with built-in error handling and scalability to manage dynamic websites and large datasets.

Implement Data Cleaning: Raw extracted data is often messy. Cleaning involves:

- Removing duplicates and irrelevant content (e.g., ads, footers).

- Standardizing formats (e.g., converting all dates to ISO format: YYYY-MM-DD).

- Handling missing values (e.g., imputing publication dates based on context).

- Filtering out low-quality sources (e.g., clickbait or unverified blogs).

Automated cleaning scripts can streamline this process, but manual review may be needed for complex cases.
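A minimal cleaning pass for two of these steps, deduplication and date standardization, might look like this. It assumes each record has `text` and `published` fields (hypothetical names). It fingerprints the normalized body text with SHA-256, so re-posts that differ only in case or whitespace collapse into one. It then tries a fixed list of input date formats. The `%m/%d/%Y` entry assumes US month-first dates; ambiguous formats should be mapped per source rather than guessed.

```python
import hashlib
from datetime import datetime

# Input formats seen across sources (an assumption; extend per source).
# "%B" expects English month names under the default C locale, and
# "%m/%d/%Y" assumes US month-first order for the sources that use it.
DATE_FORMATS = ("%Y-%m-%d", "%d %B %Y", "%B %d, %Y", "%m/%d/%Y")

def to_iso_date(raw):
    """Return the date as YYYY-MM-DD, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None

def clean(records):
    """Drop empty and duplicate articles and standardize publication dates."""
    seen = set()
    cleaned = []
    for rec in records:
        # Normalize case and whitespace, then fingerprint the body text.
        body = " ".join((rec.get("text") or "").lower().split())
        if not body:
            continue
        fingerprint = hashlib.sha256(body.encode("utf-8")).hexdigest()
        if fingerprint in seen:
            continue
        seen.add(fingerprint)
        cleaned.append(dict(rec, published=to_iso_date(rec.get("published") or "")))
    return cleaned
```

Unparseable dates come back as `None` rather than being guessed. They can be routed to manual review or imputed later.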

Validate Data Accuracy: Cross-reference extracted data with trusted sources to ensure accuracy. For example, verify article dates against publication timestamps or check author names against official bylines. Natural language processing (NLP) tools can help detect inconsistencies, such as contradictory facts within an article.
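Cross-referencing can be automated when a trusted reference exists, such as the publisher's own feed entry for the same URL. This sketch compares a scraped record with such a reference. It allows a configurable tolerance on dates and ignores case and whitespace differences in author names. The record layout, the 24-hour tolerance, and the assumption that naive timestamps are UTC are all illustrative.

```python
from datetime import datetime, timedelta, timezone

def _as_utc(value):
    """Parse an ISO 8601 string; naive values are assumed to be UTC."""
    ts = datetime.fromisoformat(value)
    return ts if ts.tzinfo else ts.replace(tzinfo=timezone.utc)

def _norm_name(name):
    """Lower-case and collapse whitespace so trivial differences are ignored."""
    return " ".join((name or "").lower().split())

def validate_against_reference(record, reference, tolerance=timedelta(hours=24)):
    """List disagreements between a scraped record and a trusted reference."""
    problems = []
    if abs(_as_utc(record["published"]) - _as_utc(reference["published"])) > tolerance:
        problems.append("published_mismatch")
    if _norm_name(record.get("author")) != _norm_name(reference.get("author")):
        problems.append("author_mismatch")
    return problems
```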

Ensure Timeliness: News data is time-sensitive. Set up automated pipelines to extract and process data in real time or near real time. For web scraping, schedule crawlers to run at regular intervals. For APIs, configure webhooks where the provider supports them so you receive updates as soon as new articles are published; otherwise, poll the API on a schedule.
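Scheduled crawling with deduplication can be sketched as a small polling loop. `fetch` is any caller-supplied function that returns article dicts with a `url` key, which is an assumption of this sketch. The loop yields only articles it has not seen before and sleeps for whatever remains of the interval. In production, a scheduler such as cron or a task queue would usually own the timing, and the set of seen URLs would live in persistent storage.

```python
import time

def poll(fetch, interval_seconds, max_runs):
    """Call fetch() on a fixed interval and yield each article the first time it appears."""
    seen = set()  # in production, persist this between runs
    for run in range(max_runs):
        started = time.monotonic()
        for article in fetch():
            if article["url"] not in seen:
                seen.add(article["url"])
                yield article
        if run < max_runs - 1:
            # Sleep only for what is left of the interval after fetching and
            # processing, so runs start at roughly even spacing.
            elapsed = time.monotonic() - started
            time.sleep(max(0.0, interval_seconds - elapsed))
```

For example, `poll(my_fetch, interval_seconds=300, max_runs=12)` would check a source every five minutes for an hour. Here `my_fetch` is a hypothetical function that wraps your HTTP request and parsing.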

Address Ethical and Legal Issues: Respect each website's terms of service and robots.txt file when scraping. Use APIs whenever possible to reduce legal risk. If collecting personal data (e.g., author names), comply with privacy regulations like GDPR or CCPA. Transparency with data sources builds trust and reduces legal exposure.
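Python's standard library includes a robots.txt parser, `urllib.robotparser`, which can gate every request a crawler makes. The sketch below parses a hypothetical robots.txt for `news.example.com` from a string so it runs offline. Against a live site you would call `set_url()` and `read()` instead. The user-agent name is hypothetical. Honoring robots.txt is one courtesy signal, not a substitute for reviewing terms of service or privacy law.

```python
from urllib import robotparser

# Hypothetical robots.txt. For a live site, use:
#   rp.set_url("https://news.example.com/robots.txt"); rp.read()
ROBOTS_TXT = """\
User-agent: *
Disallow: /subscribers/
Crawl-delay: 10
"""

USER_AGENT = "ExampleNewsBot/1.0"  # hypothetical crawler name

def build_policy(robots_text):
    """Parse robots.txt text into a RobotFileParser."""
    rp = robotparser.RobotFileParser()
    rp.parse(robots_text.splitlines())
    return rp

def allowed(rp, url):
    """True if robots.txt permits USER_AGENT to fetch url."""
    return rp.can_fetch(USER_AGENT, url)

policy = build_policy(ROBOTS_TXT)
print(allowed(policy, "https://news.example.com/world/story-1"))
print(policy.crawl_delay(USER_AGENT))  # seconds to wait between requests
```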

Monitor and Maintain Pipelines: Extraction pipelines require ongoing maintenance. Monitor for errors, such as broken scrapers due to website changes, and implement alerts for anomalies (e.g., sudden drops in data volume). Regularly update tools and scripts to adapt to evolving web technologies.
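An alert for sudden drops in data volume can start as a comparison against a trailing average. In this sketch, the seven-day window and the 50% threshold are arbitrary assumptions. Sources with strong weekly or holiday patterns may need a smarter baseline.

```python
from statistics import mean

def volume_alert(daily_counts, drop_ratio=0.5, window=7):
    """Flag the latest day if its count falls below drop_ratio times the trailing mean.

    daily_counts: articles extracted per day, oldest first (last item = today).
    """
    if len(daily_counts) < window + 1:
        return False  # not enough history to judge
    baseline = mean(daily_counts[-window - 1:-1])
    return daily_counts[-1] < drop_ratio * baseline
```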

Leverage Quality Metrics: Track data quality using metrics like:

- Accuracy Rate: Percentage of data points verified as correct.

- Completeness Score: Percentage of records with no missing fields.

- Consistency Index: Degree of uniformity in data formats.

- Freshness: Average time lag between publication and extraction.

These metrics help identify weaknesses in the extraction process and guide improvements.
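Several of these metrics can be computed directly from a batch of records. The sketch below reports three values:

- completeness;
- a simple consistency measure: the share of `published` values that parse as ISO 8601;
- mean freshness in hours.

The field names, the `extracted_at` timestamp, and the rule that naive timestamps are UTC are assumptions. Accuracy rate is omitted because it needs a manually verified sample.

```python
from datetime import datetime, timezone

FIELDS = ("title", "url", "author", "published")  # assumed schema

def _parse_utc(value):
    """Parse ISO 8601; naive values are assumed UTC. Returns None if unparseable."""
    try:
        ts = datetime.fromisoformat(value)
    except (TypeError, ValueError):
        return None
    return ts if ts.tzinfo else ts.replace(tzinfo=timezone.utc)

def quality_metrics(records):
    """Batch-level completeness %, consistency %, and mean freshness in hours."""
    if not records:
        return {}
    n = len(records)
    complete = sum(all(r.get(f) for f in FIELDS) for r in records)
    published = [_parse_utc(r.get("published")) for r in records]
    consistent = sum(ts is not None for ts in published)
    lags = []
    for r, pub in zip(records, published):
        extracted = _parse_utc(r.get("extracted_at"))
        if pub is not None and extracted is not None:
            lags.append((extracted - pub).total_seconds() / 3600)
    return {
        "completeness_pct": 100 * complete / n,
        "consistency_pct": 100 * consistent / n,
        "mean_freshness_hours": sum(lags) / len(lags) if lags else None,
    }
```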

The Business Impact of Data Quality

Investing in high-quality news and article data yields significant benefits:

- Improved Decision-Making: Accurate data leads to better insights for market strategies or policy decisions.

- Enhanced Customer Experience: High-quality data powers personalized content recommendations, boosting user engagement.

- Operational Efficiency: Clean, consistent data reduces the need for manual corrections, saving time and resources.

- Competitive Advantage: Timely, relevant data helps organizations stay ahead of trends and competitors.

Conversely, low-quality data can have costly consequences. A 2021 Gartner study estimated that poor data quality costs organizations an average of $12.9 million annually. In news-driven industries, these losses can manifest as missed opportunities, reputational damage, or regulatory fines.

Case Study: Sentiment Analysis for Brand Monitoring

Consider a company using news data to monitor brand sentiment. By extracting articles from reputable sources and ensuring high data quality, the company can:

- Accurately gauge public perception through NLP-based sentiment analysis.

- Identify emerging PR risks by tracking negative coverage in real time.

- Tailor marketing campaigns based on positive media mentions.

Without attention to data quality, the analysis might include irrelevant articles, outdated news, or inaccurate sentiment scores, leading to misguided strategies.
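To show why relevance filtering matters before scoring, here is a deliberately toy sketch. It keeps only articles that mention the brand and scores them with tiny hand-written word lists. It then flags negative articles as potential PR risks. The word lists and the record layout are hypothetical; a real system would use a trained sentiment model or a maintained lexicon.

```python
import re

# Toy word lists for illustration only.
POSITIVE = {"praised", "growth", "award", "strong", "record"}
NEGATIVE = {"recall", "lawsuit", "outage", "fined", "breach"}

def score(text):
    """Positive word count minus negative word count."""
    words = re.findall(r"[a-z']+", text.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)

def brand_report(articles, brand):
    """Score only articles that mention the brand; list negative ones as PR risks."""
    pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    relevant = [a for a in articles if pattern.search(a["text"])]
    scored = [(a["url"], score(a["text"])) for a in relevant]
    return {
        "mentions": len(scored),
        "net_sentiment": sum(s for _, s in scored),
        "risks": [url for url, s in scored if s < 0],
    }
```

Without the relevance filter, unrelated articles would add noise to `net_sentiment`. This is the data-quality failure the case study describes.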

How Can OTT Scrape Help You?

- Comprehensive Content Insights: Extract detailed data on movies, TV shows, genres, ratings, and release dates from platforms like Netflix, Amazon Prime, and Disney+.

- Competitive Analysis: Analyze content offerings across multiple platforms to understand market trends, viewer preferences, and competitor strategies.

- Real-Time Updates: Get timely and accurate data on new releases, trending shows, and updated content to stay ahead of the curve.

- Personalized Recommendations: Use scraped data to build or improve recommendation algorithms based on user preferences and content trends.

- Data for Research and Reporting: Support market research, academic studies, and business intelligence with structured, high-quality streaming content data.

Conclusion

Extracting news and article data offers immense value, but its effectiveness depends on data quality. Overcoming challenges like dynamic web structures, access restrictions, and irrelevant content is key to building robust extraction pipelines. Sound news data scraping techniques enable efficient collection of relevant data while preserving accuracy. By defining clear objectives, cleaning the extracted content, and continuously monitoring data quality, organizations can achieve high-quality data extraction: data that is accurate, complete, and timely. In a world driven by information, data extracted from news articles becomes a strategic asset. Organizations that prioritize data quality will make better decisions and gain a competitive advantage by turning raw news data into valuable, actionable insights.

Embrace the potential of OTT Scrape to unlock these insights and stay ahead in the competitive world of streaming!